In part one we described the main types of memory. This time we’ll take a look at the memory management issues specific to consoles, or issues that are at least more prevalent in console game programming. The key differences between your regular PC and consoles are:

- No virtual memory, which means once you’re out of memory, you’re out of memory. No swap file is going to save you on a console. Also, this makes memory fragmentation a serious concern.

- Multiple physical memory types. On the PC you have RAM and video RAM, but a video driver manages them for you. Not so on consoles.

- Memory alignment is also a problem on consoles. The x86 CPU is very forgiving, but console CPUs are generally stricter. Alignment is also very important for performance.

Now let’s take a look at these issues and others in more detail.

Virtual Memory

Having no virtual memory or swap file is the reason we need to focus so much time and energy on memory management. On a console, when you’ve used all your memory you can’t swap memory to the swap disk. You have no choice but to fit within the limits of the system.

We looked at heap fragmentation in part one. Let’s take a quick look at how virtual memory helps to resolve the issue on PCs.

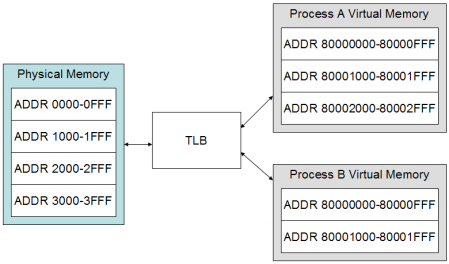

In the above diagram we have 2 processes running on a CPU with 4 pages of physical memory. Each process has its own view of memory; you can see they are both using the same memory addresses. In between we see the translation lookaside buffer (or TLB), which is responsible for mapping virtual addresses to physical addresses. (Strictly speaking, the mapping itself lives in a per-process page table maintained by the OS, and the TLB is the hardware cache of recently used entries; the diagram treats the two as one.) When a process accesses memory, the TLB tries to translate the virtual address into a physical one. If the address isn’t in the TLB, the page table is consulted, and if the page isn’t currently in physical memory a page fault occurs, which causes the OS to take control and rearrange physical memory (e.g. by using the swap file) to ensure the requested virtual address is put somewhere in physical memory. This mapping is what allows two processes to have duplicate addresses: each process has its own table, and when the OS switches processes it switches the mapping as well. Incidentally, this is one of the key differences between threads and processes: threads share an address mapping, processes don’t.

So what does this have to do with heap fragmentation? What the TLB is actually doing is providing a layer of indirection between a process’s memory addressing and the actual physical memory addresses.

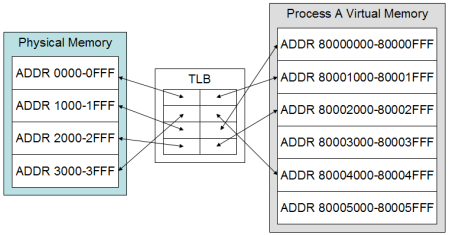

The above diagram shows how the TLB maps virtual memory to physical pages of memory. Fragmentation-wise, the important thing to note is that addresses contiguous in virtual memory don’t have to be contiguous in physical memory. Any free physical page can back any virtual page, so fragmentation of physical memory stops being a practical problem. Also, since virtual memory addresses don’t have to correspond to any real memory address, the OS is free to assign almost any address in the 32-bit address space when allocating memory. That’s not to say fragmentation is impossible on a PC (the virtual address space itself can still fragment), just rare.
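To make the indirection concrete, here is a minimal sketch of address translation. It is a toy model, not how any particular OS or console implements it: the 4 KB page size, the flat table and the names `PageTable` and `Translate` are all assumptions made for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Toy model only: 4 KB pages and a flat per-process page table.
constexpr std::uint32_t kPageSize = 4096;
constexpr std::uint32_t kNotMapped = 0xFFFFFFFFu;

struct PageTable {
    // Index = virtual page number, value = physical page number.
    std::vector<std::uint32_t> physicalPage;
};

// Translates a virtual address into a physical one.
// Returns false when the page is not mapped; a real OS would handle
// a page fault at this point instead of giving up.
bool Translate(const PageTable& table, std::uint32_t virtualAddr,
               std::uint32_t* physicalAddr) {
    assert(physicalAddr != nullptr);
    const std::uint32_t page = virtualAddr / kPageSize;
    const std::uint32_t offset = virtualAddr % kPageSize;
    if (page >= table.physicalPage.size() ||
        table.physicalPage[page] == kNotMapped) {
        return false;
    }
    *physicalAddr = table.physicalPage[page] * kPageSize + offset;
    return true;
}
```

With a table of `{3, 0, kNotMapped}`, virtual pages 0 and 1 are contiguous from the process’s point of view, yet they land in physical pages 3 and 0. That is exactly the freedom that keeps physical fragmentation from mattering.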

So, as already mentioned, consoles don’t really have a complete virtual memory system, especially since they often don’t have a disk to swap memory to. Physical memory has a fixed layout and can’t be rearranged the way a PC can with its swap disk. However, consoles do have modern CPUs with the ability to do virtual memory addressing via a TLB. It won’t help with the fragmentation problem, but we’ll look at other interesting uses for virtual memory addressing in a later article.

Physical Memory

Physical memory types are memories that are physically distinct: they sit in different locations in the hardware, often with different sizes, speeds and access restrictions. The most common example is main (or system) memory and video memory. PCs are built like this too: the CPU has one set of memory and the video card has its own set. On the PC the video memory is generally not directly accessible and is managed by the video driver. On a console it is usually up to the game to manage its own memory.

The arrangement of physical memory is where most consoles differ from each other (the other main difference is the CPU and GPU configuration). Each console can have very different configurations and restrictions when it comes to physical memory types. However, the restrictions imposed by physical memory types usually follow a few common patterns.

Here are some issues that physical memory types can impose:

- Different physical addressing. Main memory may have addresses in the range [0x60000000, 0x6fffffff] while video memory might have the range [0x10000000, 0x1fffffff].

- Different visibility from different devices. The main CPU may not be able to access parts of video memory. Similarly, the GPU may not be able to access memory in the CPU’s cache. This means that when the CPU creates data for the GPU to use, it must make sure the data is flushed from the cache before the GPU accesses it.

- Non-symmetrical access and throughput. Just because a device can read from a memory location doesn’t mean it can write to that same location. It wouldn’t be unheard of for a GPU to be able to read from main memory but be unable to write to it. Often devices can both read and write, but at different rates. It’s also common for the CPU to write to video memory at 1 GB/s but only read at, say, 256 MB/s. In some cases rates can differ by factors of 10, 100 or more!

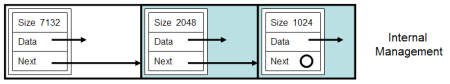

These differences affect how we manage memory. Different physical addressing requires that we maintain separate heaps for different memory types: the CPU allocates from main memory, while GPU data (like textures and meshes) uses video memory. It also means we need to provide a way of allocating memory of a specific physical type. Different visibility means that memory shared between devices (like CPU and GPU) may need to be marked as uncached. We’ll have to take that into account when allocating memory too, especially since uncached memory can have severe performance issues if handled improperly. Lastly, non-symmetrical access will affect how we manage physical memory. In part one we created a simple heap allocator. This allocator managed the heap internally, using memory from the heap itself for its bookkeeping. Our internal heap allocator looked like this:

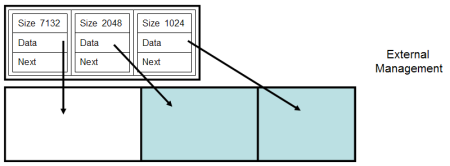

But what if the CPU can’t read or write to the video memory? How would we be expected to manage the heap?

We obviously couldn’t use the same allocation scheme. Instead we’d manage the heap externally, using main memory to track the allocated blocks of video memory. This would let us allocate memory from the video memory without ever having to read or write to video memory.

It’s a pretty straightforward change from our previous heap manager, but has some additional constraints. The beauty of an internally managed heap is that the number of allocations is simply limited by the amount of memory available to the heap. However, this is not the case with an external manager. How much memory do we need to manage the external heap? It’s an open question, since like any other memory management problem there are a number of possible solutions, from a simple fixed maximum number of allocations to a more dynamic system. There isn’t necessarily a single best solution.
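As a rough illustration of the "fixed maximum number of allocations" option, here is a minimal sketch of an externally managed heap. It is not the allocator from part one: the class name `ExternalHeap`, the 64-record budget and the first-fit policy are assumptions chosen to keep the example short. All bookkeeping lives in main memory, and the managed range is only ever described by offsets.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical sketch: records live in main memory; the managed range
// (e.g. video memory) is described by offsets and never read or written.
class ExternalHeap {
public:
    static constexpr std::size_t kMaxBlocks = 64;          // fixed record budget (assumption)
    static constexpr std::uint32_t kInvalid = 0xFFFFFFFFu;

    explicit ExternalHeap(std::uint32_t heapSize) : count_(1) {
        assert(heapSize > 0);
        blocks_[0] = Block{0, heapSize, false};            // one big free block
    }

    // First-fit allocation. Returns an offset into the managed range, or kInvalid.
    std::uint32_t Allocate(std::uint32_t size) {
        if (size == 0) return kInvalid;
        for (std::size_t i = 0; i < count_; ++i) {
            Block& b = blocks_[i];
            if (b.used || b.size < size) continue;
            if (b.size > size) {
                if (count_ == kMaxBlocks) return kInvalid; // out of records, not out of memory
                // Split: shift later records up and insert the free remainder.
                for (std::size_t j = count_; j > i + 1; --j) blocks_[j] = blocks_[j - 1];
                blocks_[i + 1] = Block{b.offset + size, b.size - size, false};
                ++count_;
                b.size = size;
            }
            b.used = true;
            return b.offset;
        }
        return kInvalid;
    }

    // Frees the block starting at offset and merges it with free neighbours.
    void Free(std::uint32_t offset) {
        for (std::size_t i = 0; i < count_; ++i) {
            if (blocks_[i].offset != offset || !blocks_[i].used) continue;
            blocks_[i].used = false;
            if (i + 1 < count_ && !blocks_[i + 1].used) Merge(i);
            if (i > 0 && !blocks_[i - 1].used) Merge(i - 1);
            return;
        }
    }

private:
    struct Block { std::uint32_t offset; std::uint32_t size; bool used; };

    // Merges record i+1 into record i and closes the gap in the array.
    void Merge(std::size_t i) {
        blocks_[i].size += blocks_[i + 1].size;
        for (std::size_t j = i + 1; j + 1 < count_; ++j) blocks_[j] = blocks_[j + 1];
        --count_;
    }

    Block blocks_[kMaxBlocks];
    std::size_t count_;
};
```

Note the new failure mode this design introduces: an allocation can fail because the record array is full even though the managed memory still has room. That is the cost of the fixed budget, and the reason more dynamic schemes exist.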

Alignment

Alignment is a limitation of the hardware which only allows access to data if it is located at certain addresses in memory. For example, a single char variable may be located at any address, but a float variable must be located at an address that is a multiple of 4 bytes. Misaligned addresses can cause incorrect data to be used, or in some cases a crash. Let’s look at some sample code:

    char buffer[1024];
    float c = 3.0f;
    *(float *)(&buffer[0]) = c;
    float d = *(float *)(&buffer[0]);
    printf( "Value: %f\n", d );

All we’re doing is forcing a float variable into a data buffer and reading it back out. What will the output be?

    Value: 3.000000

Just what we expected.

What if we make a simple change, and instead of storing the float at the beginning of the array, we offset it a single byte:

    *(float *)(&buffer[1]) = c;
    float d = *(float *)(&buffer[1]);

Now what will the output be?

Well, I don’t know! It’s pretty much undefined.

If you run this code on your x86 PC you’ll probably get the expected result. Run it on another type of CPU (like a PowerPC or SPARC) and anything goes. Some CPUs are set to throw an exception, causing the program to halt. Others will silently round unaligned addresses down to the expected alignment. So in the previous example

    float d = *(float *)(&buffer[1]);

effectively becomes

    float d = *(float *)(&buffer[0]);

Thus reading from the wrong address and causing data to be misinterpreted. This usually happens silently, with no error or notification, and it can be very frustrating when trying to debug misaligned data.

So how come programs don’t break all over the place? Because the compiler knows the alignment of each type and will place data at the proper alignment. So when you put a float on the stack you’re guaranteed it will have the correct alignment.
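When a value really does have to live at an arbitrary byte offset (in a packed file format or a network buffer, say), copying its bytes sidesteps the problem instead of casting the pointer. The sketch below uses `std::memcpy` from the standard library; the helper names `StoreFloatUnaligned` and `LoadFloatUnaligned` are made up for this example.

```cpp
#include <cassert>
#include <cstring>

// Writes a float to an arbitrary (possibly misaligned) byte address.
inline void StoreFloatUnaligned(void* dst, float value) {
    assert(dst != nullptr);
    std::memcpy(dst, &value, sizeof(value));
}

// Reads a float from an arbitrary (possibly misaligned) byte address.
inline float LoadFloatUnaligned(const void* src) {
    assert(src != nullptr);
    float value;                       // this local is correctly aligned
    std::memcpy(&value, src, sizeof(value));
    return value;
}
```

The float itself only ever exists in a properly aligned local variable; the buffer is treated as plain bytes. Storing to `&buffer[1]` and loading it back this way works regardless of how strict the CPU is, at the cost of a copy.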

Structs & Classes

How does alignment affect the layout of structs and classes? We know the compiler will align data correctly; for a better understanding let’s look at some samples.

    struct foo
    {
        char c;
        float f;
    };

    struct bar
    {
        float f;
        char c;
    };

Assuming a float requires a 4 byte alignment and char only 1 byte, they’ll look like this in memory.

| ADDR | 0000 | 0001 | 0002 | 0003 | 0004 | 0005 | 0006 | 0007 |
|------|------|------|------|------|------|------|------|------|
| foo  | c    | -    | -    | -    | f    | f    | f    | f    |
| bar  | f    | f    | f    | f    | c    |      |      |      |

The float f remains aligned to 4 bytes in both cases. With foo we see that the compiler has left a few bytes unused after c; this is called padding. Padding means that the size of foo is 8 bytes, even though you might expect it to be 5. What about bar? Well, on most platforms it’s probably 8 bytes too. The compiler will by default pad structs and classes to a multiple of their alignment, usually 4 or 8 bytes or so. The main reason is to avoid misaligned data, for example when the struct is placed in an array.
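You don’t have to guess at the layout: `sizeof`, `alignof` and `offsetof` will tell you what the compiler actually did. The sketch below checks only the properties that always hold and prints the rest, since the exact numbers are platform-specific. `PrintLayout` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdio>

struct foo { char c; float f; };
struct bar { float f; char c; };

// The compiler must place f on a float boundary and keep array elements aligned.
static_assert(offsetof(foo, f) % alignof(float) == 0, "f is aligned inside foo");
static_assert(sizeof(foo) % alignof(foo) == 0, "foo elements stay aligned in arrays");
static_assert(sizeof(bar) % alignof(bar) == 0, "bar elements stay aligned in arrays");

// Prints the layout chosen by the current compiler; values are platform-specific.
void PrintLayout() {
    std::printf("foo: size %zu, align %zu, offset of f %zu\n",
                sizeof(foo), alignof(foo), offsetof(foo, f));
    std::printf("bar: size %zu, align %zu, offset of c %zu\n",
                sizeof(bar), alignof(bar), offsetof(bar, c));
}
```

The second and third assertions capture why bar gets tail padding: the size of a struct is always a multiple of its alignment, so that the second element of an array starts on a correctly aligned address.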

You can override this automatic padding with compiler #pragmas:

    #pragma pack(1)
    struct bar
    {
        float f;
        char c;
    };
    #pragma pack()   // restore the default packing

In this case sizeof(bar) would be 5 bytes. However, packing structs is dangerous: it can break harmless-looking code.

    #pragma pack(1)
    struct bar
    {
        float f;
        char c;
    };
    #pragma pack()   // restore the default packing

    bar barArray[2];

This might seem safe, but what is the address of barArray[1].f? It’s no longer aligned to 4 bytes, and can suffer from the misalignment issues mentioned above.
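A quick way to see the problem is to compute where each f lands. This sketch assumes a compiler that supports `#pragma pack` (GCC, Clang and MSVC all do); `PackedBar` and `OffsetOfF` are names invented for the example.

```cpp
#include <cassert>
#include <cstddef>

#pragma pack(push, 1)   // pack tightly, remembering the previous setting
struct PackedBar {
    float f;
    char c;
};
#pragma pack(pop)       // restore default packing for everything that follows

// Byte offset of element[index].f from the start of a PackedBar array.
constexpr std::size_t OffsetOfF(std::size_t index) {
    return index * sizeof(PackedBar) + offsetof(PackedBar, f);
}
```

With a 5-byte element, `OffsetOfF(1)` is 5, which is not a multiple of 4. Only every fourth element happens to land on a float boundary, which makes this kind of bug look intermittent.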

There’s no real way to avoid alignment issues other than being mindful of them when designing data structures and writing code. Generally alignment isn’t an issue until you start doing odd memory casts like in our above examples. Accessing variables in a straightforward manner, without casting different types through arbitrary pointers as above, will avoid most cases where misalignment will cause errors.

Hardware Access

Some hardware, like the GPU, the sound processor and even the DVD drive, has even stricter alignment restrictions. Sometimes the alignment requirements are very large, like 32 bytes, or even 128 or 4096 bytes or more. The reasons are complicated, but have to do with how memory is transferred between devices and accessed at a hardware level. For example, the data for a texture may have to start at a physical page boundary, i.e. at a 4 KB alignment. This means that when we load and allocate memory for the texture we have to be aware of this requirement. As with the alignment of regular data types, the hardware will often silently read the wrong data if it is misaligned. Needless to say, you have to be familiar with the requirements of the hardware you’re interacting with.

As far as memory management is concerned, hardware requirements mean that we’ll need to provide the functionality to allocate memory at various alignments.
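One common way to get arbitrary alignment out of an allocator that doesn’t offer it is to over-allocate and round the address up. The sketch below layers this on top of `malloc` purely for illustration; it is not the memory manager this series builds, and `AlignUp`, `AlignedAlloc` and `AlignedFree` are hypothetical names. It assumes power-of-two alignments.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>

// Rounds value up to the next multiple of alignment (must be a power of two).
inline std::uintptr_t AlignUp(std::uintptr_t value, std::size_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    const std::uintptr_t mask = static_cast<std::uintptr_t>(alignment) - 1;
    return (value + mask) & ~mask;
}

// Over-allocates, aligns inside the block, and stores the original pointer
// just before the aligned address so AlignedFree can find it again.
inline void* AlignedAlloc(std::size_t size, std::size_t alignment) {
    void* raw = std::malloc(size + alignment + sizeof(void*));
    if (raw == nullptr) return nullptr;
    const std::uintptr_t start = reinterpret_cast<std::uintptr_t>(raw) + sizeof(void*);
    void** aligned = reinterpret_cast<void**>(AlignUp(start, alignment));
    aligned[-1] = raw;
    return aligned;
}

// Releases a block obtained from AlignedAlloc.
inline void AlignedFree(void* ptr) {
    if (ptr != nullptr) std::free(static_cast<void**>(ptr)[-1]);
}
```

The extra `alignment + sizeof(void*)` bytes per allocation are the price of this approach, which is worth keeping in mind for 4 KB alignments.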

Global Variables and Arrays

What if you need a global variable, or data buffer to have a specific alignment? Like some global buffer you want to send to the GPU?

For simplicity’s sake it’s usually easier to avoid having to specify alignment directly, but sometimes it is unavoidable. Most compilers support some extension to align data, even within structs or classes. GCC supports alignment like so:

    int buffer[1000] __attribute__ ((aligned (16))); // create a buffer with 16 bytes alignment (&buffer % 16 == 0)

    struct foo
    {
        char c;
        float simdfloat[4] __attribute__((aligned(16))); // make sure our vectorized data is aligned
    };
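If your compiler supports C++11, the standard `alignas` specifier expresses the same thing without a vendor extension. A minimal sketch, with `g_buffer` and `AlignedFoo` as illustrative names:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Standard C++11 spelling of a 16-byte aligned global buffer.
alignas(16) int g_buffer[1000];

struct AlignedFoo {
    char c;
    alignas(16) float simdfloat[4];   // member starts on a 16-byte boundary
};

static_assert(alignof(AlignedFoo) == 16, "struct takes on its strictest member alignment");
static_assert(offsetof(AlignedFoo, simdfloat) == 16, "15 bytes of padding follow c");
static_assert(sizeof(AlignedFoo) == 32, "size is rounded up to a multiple of 16");
```

The static assertions make the cost visible: a struct holding 17 bytes of data occupies 32.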

Waste

We’ve seen that data structures get padded to meet alignment requirements, and that we may have to allocate blocks of memory with large alignment requirements. This means that we might be wasting memory to meet proper alignment requirements. While 3-4 bytes here and there may not seem like much, it can add up quickly, especially with larger alignment requirements. There isn’t much we can do but be aware of the issue and try to limit waste by designing structs that don’t need padding and by sizing buffers so that alignment doesn’t waste too much.
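Member order is the cheapest lever here. The sketch below compares two structs with the same members; the type names and the `PaddingBytes` helper are invented for the example, and the exact sizes depend on the platform.

```cpp
#include <cassert>
#include <cstddef>

// Members declared in an order that forces padding on both sides of f.
struct Wasteful {
    char a;    // typically followed by 3 padding bytes
    float f;
    char b;    // typically followed by 3 more padding bytes
};

// Same members, strictest alignment first, so the chars share one padded tail.
struct Compact {
    float f;
    char a;
    char b;
};

// Bytes of T that hold no member data, given the total size of its members.
template <typename T>
constexpr std::size_t PaddingBytes(std::size_t payloadBytes) {
    return sizeof(T) - payloadBytes;
}
```

On a typical platform with 4-byte floats, `Wasteful` comes out at 12 bytes and `Compact` at 8 for the same 6 bytes of data. Multiply that by a few hundred thousand particles or vertices and the difference is no longer trivial.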

Working with Alignment

How do we know the alignment of any given type? You pretty much have to read the CPU and hardware documentation specific to your system. However some general rules of thumb are:

- Basic data types require an alignment equal to their size. E.g. a float (4 bytes) requires 4-byte alignment. A 4-float SIMD vector type (16 bytes) will likely require 16-byte alignment.

- Hardware buffers often require an alignment equal to a cache line. If a CPU has 128-byte cache lines, you’ll probably find that many devices require 128-byte aligned data.

Some general advice when designing data structures: try to make the size of a struct a multiple of the alignment of its most strictly aligned member (e.g. 16 bytes for SIMD vectors), adding padding if required. This can make allocating arrays of structures less awkward. But don’t go crazy. For many basic structs alignment doesn’t cause issues, since compilers aren’t completely stupid and usually do the right thing.
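These rules of thumb are easy to turn into checks. The sketch below pairs a run-time `IsAligned` test with compile-time assertions on a struct that carries explicit padding. `Particle` and `IsAligned` are hypothetical names, and the 16-byte figure is just the SIMD example from above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Returns true when ptr sits on a multiple of alignment (a power of two).
inline bool IsAligned(const void* ptr, std::size_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (reinterpret_cast<std::uintptr_t>(ptr) & (alignment - 1)) == 0;
}

// Hypothetical SIMD-friendly struct: explicit padding keeps the size at 32 bytes.
struct Particle {
    alignas(16) float position[4];
    float mass;
    float pad[3];   // explicit padding instead of leaving it to the compiler
};

static_assert(alignof(Particle) == 16, "strictest member decides struct alignment");
static_assert(sizeof(Particle) % alignof(Particle) == 0, "arrays keep every element aligned");
```

Spelling the padding out as a member changes nothing about the layout here, but it documents the wasted bytes and gives you an obvious place to put the next small field.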

Requirements

We’ve gone over some of the main issues specific to memory management on consoles. Let’s do a quick overview to identify what is required of our memory management system.

- Multiple types of physical memory require that we be able to specify what type of memory we want to allocate

- We’ll need a way of indicating whether memory is cached or not

- We’ll need a way of specifying alignment

If we compare our requirements against what is provided by the standard C runtime, malloc, we’ll see that there’s no way of specifying memory type, no way of specifying caching and no way of specifying alignment! We’ll look at solutions to this and other issues when we start designing our memory manager in the next part of this series.
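To picture what such a request might carry, here is a rough sketch of an allocation interface with all three parameters. It is not the design from the next part: every name in it (`MemoryType`, `CacheMode`, `AllocRequest`, `Arena`, `Allocate`) is hypothetical, and the allocator behind it is a trivial linear one that never frees.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// All names below are hypothetical; they only illustrate the three requirements.
enum class MemoryType { Main, Video };
enum class CacheMode { Cached, Uncached };

struct AllocRequest {
    std::size_t size;
    std::size_t alignment;   // power of two
    MemoryType type;
    CacheMode cache;
};

// Stand-in for one physical memory range, handed out linearly (never freed).
struct Arena {
    std::uintptr_t base;
    std::size_t capacity;
    std::size_t used;
};

// Returns an address inside the arena, or 0 when the request does not fit.
inline std::uintptr_t ArenaAllocate(Arena& arena, const AllocRequest& request) {
    assert(request.alignment != 0 && (request.alignment & (request.alignment - 1)) == 0);
    const std::uintptr_t mask = static_cast<std::uintptr_t>(request.alignment) - 1;
    const std::uintptr_t start = (arena.base + arena.used + mask) & ~mask;
    const std::uintptr_t end = start + request.size;
    if (end > arena.base + arena.capacity) return 0;
    arena.used = static_cast<std::size_t>(end - arena.base);
    return start;
}

// Picks the arena by memory type. The cache mode is carried in the request
// but not acted on: how memory becomes uncached is platform-specific.
inline std::uintptr_t Allocate(Arena& mainMem, Arena& videoMem, const AllocRequest& request) {
    Arena& arena = (request.type == MemoryType::Video) ? videoMem : mainMem;
    return ArenaAllocate(arena, request);
}
```

Because the arenas are described purely by address ranges, the same code works whether or not the CPU can actually touch the memory in question, which ties back to the externally managed heap discussed earlier.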

Just to point out that virtual memory is not the same as paging, and that current generation consoles use virtual memory (at least Xbox 360 does).

Comment by Anonymous — September 5, 2008 @ 4:28 pm

> The complier will by default pad all structs and classes,

> usually to 4 or 8 bytes or so.

> The main reason is to avoid misaligned data.

This is, uh, wrong. sizeof(bar) is 8 because it can be put in arrays, as you mention later. Size of struct { int8_t; int8_t; int8_t; } will be 3 bytes, size of struct { int16_t; int16_t; int8_t; } will be 6 bytes (assuming gcc/msvc), no 4/8 byte alignment here.

Comment by Arseny Kapoulkine — January 3, 2009 @ 11:12 am

No, it’s not wrong, and assuming 4 byte alignment for “standard” (float, int, pointers) types is a good rule of thumb. (Although as 64-bit becomes more and more common it may not be true anymore).

I state at the end of my post that “Basic data types require an alignment equal to their size” which is generally true – and is exactly what you claim with your examples.

My foo/bar example specifically uses floats, since floating point variables often have more restrictive alignment requirements than integer variables. So, nothing is wrong here, but it may not reflect what you see with x86 architectures.

Comment by systematicgaming — January 3, 2009 @ 12:20 pm